Paper Review - Dynamic Tensor Rematerialization

Dynamic Tensor Rematerialization (DTR) treats GPU memory as a large cache, where tensors can be evicted to save memory, and recomputed if needed later.

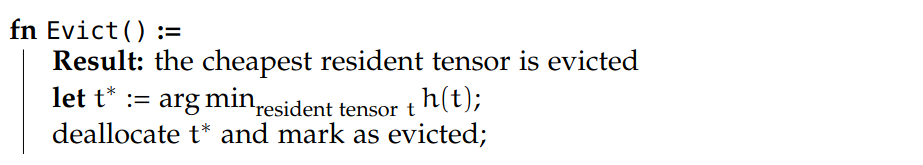

DTR’s eviction policy relies on the heuristic \(h\). The heuristic assigns a value \(h(t)\) to each resident tensor \(t\), approximating the cost of evicting the tensor. DTR evicts the tensor with the lowest cost based on the value of \(h\). \(h\) can factor in arbitrary metadata.

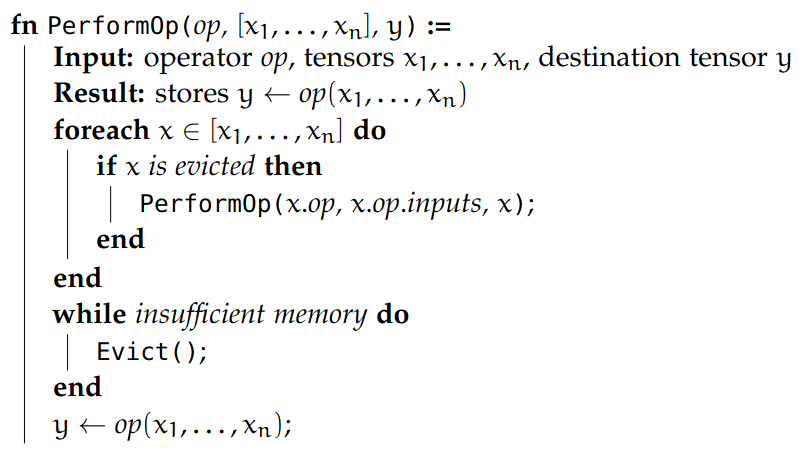

During every operator call in PyTorch, DTR intercepts the call and performs the following tasks:

In short, whenever we perform an operation, we first recursively re-calculate all the non-resident tensors the current operation depends on, while evicting tensors we don’t need until there are enough GPU space left. To decide which tensors to evict, DTR uses the tensor with the lowest value \(h\):

The heuristic \(h\) evicts tensors based on three properties: staleness, size, and compute cost. It evicts tensors that are: least recently used, takes large GPU memory space, and easy to recompute. \(H _{DTR}\) is computed as:

\[ h _{DTR}(s, m, c) (t) := \frac{c(t)}{m(t) \cdot s(t)’} \]

Recomputing an evicted tensor \(t\) may result in recomputing many more tensors that \(t\) recursively depends on. Thus, the paper proposes an improved heuristic to take the recursive recomputations into account (with more maintenance cost). These tensors are called evicted neighborhood \(e ^{*} (t)\).

\[ h_ {DTR-improved}(s, m, c) (t) := \frac{c(t) + \sum _{u \in e ^{*} (t)} c(u)}{m(t) \cdot s(t)’} \]

This heuristic captures the recomputation costs for all tensors that \(t\) recursively depend on.