Paper Review - Capuchin: Tensor-based GPU Memory Management for Deep Learning

This paper aims to reduce GPU memory usage during DNN training. Capuchin achieves this goal through swapping and recomputation, using the tensor as the unit of operation. The major question is how to balance swapping against recomputation to achieve maximum resource utilization.

Swap and Recomputation Benefit

The ultimate goal of swapping and recomputation is to hide their overhead as much as possible, so that the wait time at a back-access (an access to a tensor that was evicted earlier) is minimized. For swapping, we should increase the overlap between swapping and computing; for recomputation, we should use cheap operations.

Determining Tensor Re-generation Cost

For swapping, it is usually not optimal to swap a tensor back in only when we access it. The reason is that copying a tensor from CPU memory to GPU memory usually introduces overhead greater than the computation itself. It is thus better to swap a tensor in proactively, before it is needed.

The paper calls this an in-trigger. The idea is to pick another tensor access that lies between the evicted-access (the access after which the tensor is evicted, once it has been used in the computation) and the back-access, and to use it to start bringing the evicted tensor back a little earlier.

Of course, this may raise two questions:

- How do we know when in-trigger should happen?

- How do we deal with PCIe lane interference? For example, a swap-in may start later than its in-trigger because a previous swap-in has not finished yet.

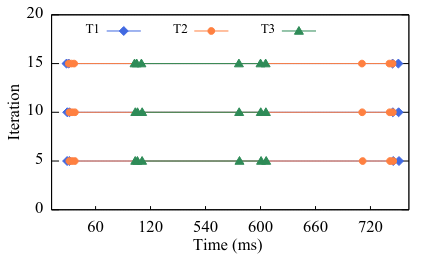

The answer is quite simple. We use runtime feedback at the back-access of a tensor. If the tensor is still being swapped in at that point, the in-trigger should be moved earlier. Note that this relies on the assumption of a regular tensor access pattern in deep learning training, as illustrated in the paper.
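The feedback loop can be sketched roughly as follows. This is a toy model, not Capuchin's implementation: `SwapPlan`, `adjust_in_trigger`, and `simulate` are hypothetical names, accesses are identified by their index in a fixed per-iteration sequence, and the in-trigger is assumed to move back by exactly one access per missed deadline.

```python
from dataclasses import dataclass


@dataclass
class SwapPlan:
    evicted_access: int  # index of the access after which the tensor is evicted
    back_access: int     # index of the access that needs the tensor again
    in_trigger: int      # index of the access that starts the swap-in


def adjust_in_trigger(plan: SwapPlan, swap_in_done: bool) -> SwapPlan:
    """Move the in-trigger one access earlier if the swap-in missed the back-access."""
    if swap_in_done:
        return plan
    # Assumption: the in-trigger cannot precede the access right after eviction.
    earliest = plan.evicted_access + 1
    return SwapPlan(plan.evicted_access, plan.back_access,
                    max(earliest, plan.in_trigger - 1))


def simulate(access_times, swap_in_time, plan, iterations):
    """Replay the same access pattern each iteration and record (in_trigger, on_time)."""
    history = []
    for _ in range(iterations):
        finish = access_times[plan.in_trigger] + swap_in_time
        on_time = finish <= access_times[plan.back_access]
        history.append((plan.in_trigger, on_time))
        plan = adjust_in_trigger(plan, on_time)
    return history
```

With access times `[0, 10, 20, 30, 40, 50]`, a swap-in that takes 25 time units, and an initial plan of `SwapPlan(1, 5, 4)`, the in-trigger moves from access 4 to 3 to 2 over successive iterations and then stays there. The sketch only works because the access sequence repeats across iterations, which is the regularity assumption mentioned above.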

Recomputation, on the other hand, is performed only on demand. No in-trigger is used for recomputation.

Capuchin relies on the principle that swapping can be largely overlapped with computation, while recomputation will certainly incur a performance penalty. Thus, it treats swapping as the first choice and falls back to recomputation only once no in-trigger can be found that fully hides the prefetching overhead.
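A minimal sketch of this swap-first rule, under simplifying assumptions: the function names, the constant PCIe bandwidth, and the single `overlap_window_s` parameter (the compute time available between the earliest usable in-trigger and the back-access) are all hypothetical and do not come from the paper.

```python
def swap_in_seconds(size_bytes: float, pcie_bytes_per_s: float) -> float:
    """Estimated transfer time, assuming a constant and uncontended PCIe bandwidth."""
    return size_bytes / pcie_bytes_per_s


def choose_policy(size_bytes: float, pcie_bytes_per_s: float,
                  overlap_window_s: float) -> str:
    """Prefer swapping when the swap-in can be fully hidden behind computation."""
    if swap_in_seconds(size_bytes, pcie_bytes_per_s) <= overlap_window_s:
        return "swap"
    # Assumption: anything that cannot be hidden falls back to recomputation.
    return "recompute"
```

For example, a 1 GB tensor over a 10 GB/s link needs about 0.1 s, so a 0.2 s window is enough to hide it and the sketch picks `"swap"`. A 4 GB tensor needs about 0.4 s and is marked `"recompute"`.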

One thing to note here is that when we select a tensor \(T\) to be recomputed, but \(T\) depends on another tensor that has been evicted, the recomputation has to start from the parent of that evicted tensor instead. This can happen multiple times if more recomputations target tensor \(T\). In short, recomputation and swapping cannot occur at the same time.
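One way to picture this dependency walk is the sketch below. It assumes a hypothetical `parents` map from each tensor to its input tensors and a `resident` set of tensors currently in GPU memory, and it assumes every chain eventually reaches a resident tensor. It illustrates the idea only and is not the paper's algorithm.

```python
def recompute_sources(tensor, parents, resident):
    """Walk up the lineage until every input is resident in GPU memory.

    Returns (sources, to_recompute): the resident tensors the recomputation
    starts from, and the evicted tensors that must be regenerated on the way.
    """
    sources = set()
    to_recompute = []
    seen = set()
    stack = [tensor]
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        to_recompute.append(current)
        for parent in parents.get(current, []):
            if parent in resident:
                sources.add(parent)   # usable as-is
            else:
                stack.append(parent)  # evicted too: go one level further up
    return sources, to_recompute
```

With `parents = {"T4": ["T3"], "T3": ["T2"], "T2": ["T1"]}` and only `"T2"` resident, recomputing `"T4"` has to start from `"T2"` and regenerate `"T3"` first.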

For more information, please refer to the original paper.